
DeepSeek, an AI offshoot of the Chinese quantitative hedge fund High-Flyer Capital Management that focuses on releasing high-performance open-source technology, has unveiled R1-Lite-Preview, its latest reasoning-focused large language model. For now, it is available exclusively through DeepSeek Chat, the company’s web-based AI chatbot.

DeepSeek is known for its innovative contributions to the open-source AI ecosystem, and its new release aims to bring high-level reasoning capabilities to the public while maintaining the company’s commitment to accessible and transparent AI.

Although R1-Lite-Preview is available only through the chat application for now, it is already turning heads by delivering performance that approaches, and in some cases exceeds, that of OpenAI’s vaunted o1-preview model.

Like that model, released in September 2024, DeepSeek-R1-Lite-Preview exhibits “chain-of-thought” reasoning: it shows users the chains of “thought” it follows when responding to their queries and inputs, documenting the process by explaining what it is doing and why.

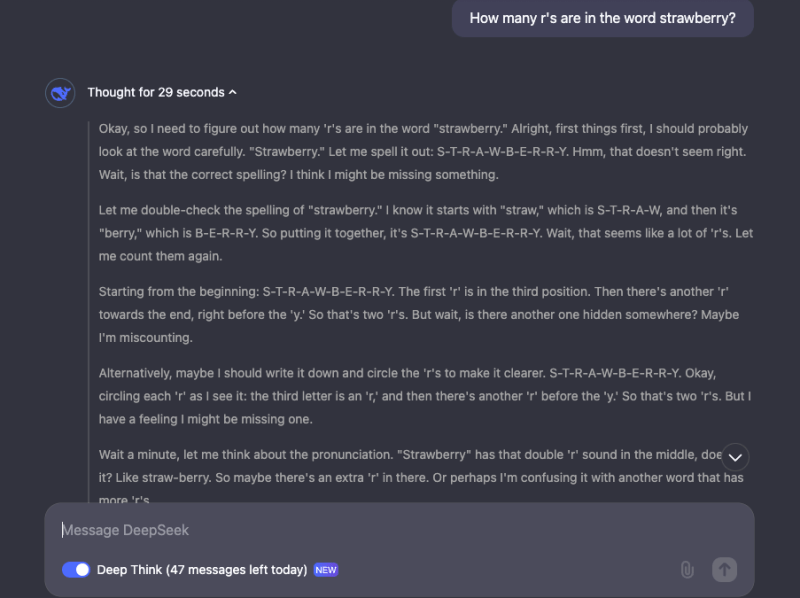

While some of its chains of thought may appear nonsensical or even erroneous to humans, DeepSeek-R1-Lite-Preview appears on the whole to be strikingly accurate. It even answers “trick” questions that have tripped up older but still powerful AI models such as GPT-4o and Anthropic’s Claude family, including “how many Rs are in the word ‘strawberry’?” and “which is larger, 9.11 or 9.9?” See screenshots below of my tests of these prompts on DeepSeek Chat:

A New Approach to AI Reasoning

DeepSeek-R1-Lite-Preview is designed to excel in tasks requiring logical inference, mathematical reasoning, and real-time problem-solving.

According to DeepSeek, the model exceeds OpenAI’s o1-preview-level performance on established benchmarks such as AIME (American Invitational Mathematics Examination) and MATH.

Its reasoning capabilities are complemented by a transparent thought process that lets users follow along as the model tackles complex challenges step by step.

DeepSeek has also published scaling data showing steady accuracy improvements when the model is given more time, or more “thought tokens,” to solve problems. Its performance graphs show scores on benchmarks such as AIME rising as thought depth increases.

Benchmarks and Real-World Applications

DeepSeek-R1-Lite-Preview has performed competitively on key benchmarks.

The company’s published results highlight its ability to handle a wide range of tasks, from complex mathematics to logic-based scenarios, with scores that rival top-tier models on reasoning benchmarks such as GPQA and Codeforces.

The transparency of its reasoning process further sets it apart. Users can observe the model’s logical steps in real time, adding an element of accountability and trust that many proprietary AI systems lack.

However, DeepSeek has not yet released the full code for independent third-party analysis or benchmarking, nor has it made DeepSeek-R1-Lite-Preview available through an API, which would allow the same kind of independent testing.

In addition, the company has not yet published a blog post or technical paper explaining how DeepSeek-R1-Lite-Preview was trained or architected, leaving many open questions about its origins.

Accessibility and Open-Source Plans

R1-Lite-Preview is now accessible through DeepSeek Chat at chat.deepseek.com. The model is free for public use, though its advanced “Deep Think” mode is limited to 50 messages per day, which still offers ample opportunity for users to experience its capabilities.

Looking ahead, DeepSeek plans to release open-source versions of its R1 series models and related APIs, according to the company’s posts on X.

This move aligns with the company’s history of supporting the open-source AI community.

Its previous release, DeepSeek-V2.5, earned praise for combining general language processing and advanced coding capabilities, making it one of the most powerful open-source AI models at the time.

Building on a Legacy

DeepSeek is continuing its tradition of pushing boundaries in open-source AI. Earlier models such as DeepSeek-V2.5 and DeepSeek Coder demonstrated impressive capabilities across language and coding tasks, with benchmark results placing them among the leaders in the field.

R1-Lite-Preview adds a new dimension, focusing on transparent reasoning and scalability.

As businesses and researchers explore applications for reasoning-intensive AI, DeepSeek’s commitment to openness should help keep its models a vital resource for development and innovation.

By combining high performance, transparent operations, and open-source accessibility, DeepSeek is not just advancing AI but also reshaping how it is shared and used.

R1-Lite-Preview is available now for public testing. Open-source models and APIs are expected to follow, further solidifying DeepSeek’s position as a leader in accessible, advanced AI technologies.
